5D Covariance Tracing for Efficient Defocus and Motion Blur

Laurent Belcour, Cyril Soler, Kartic Subr, Nicolas Holzschuch, Frédo Durand

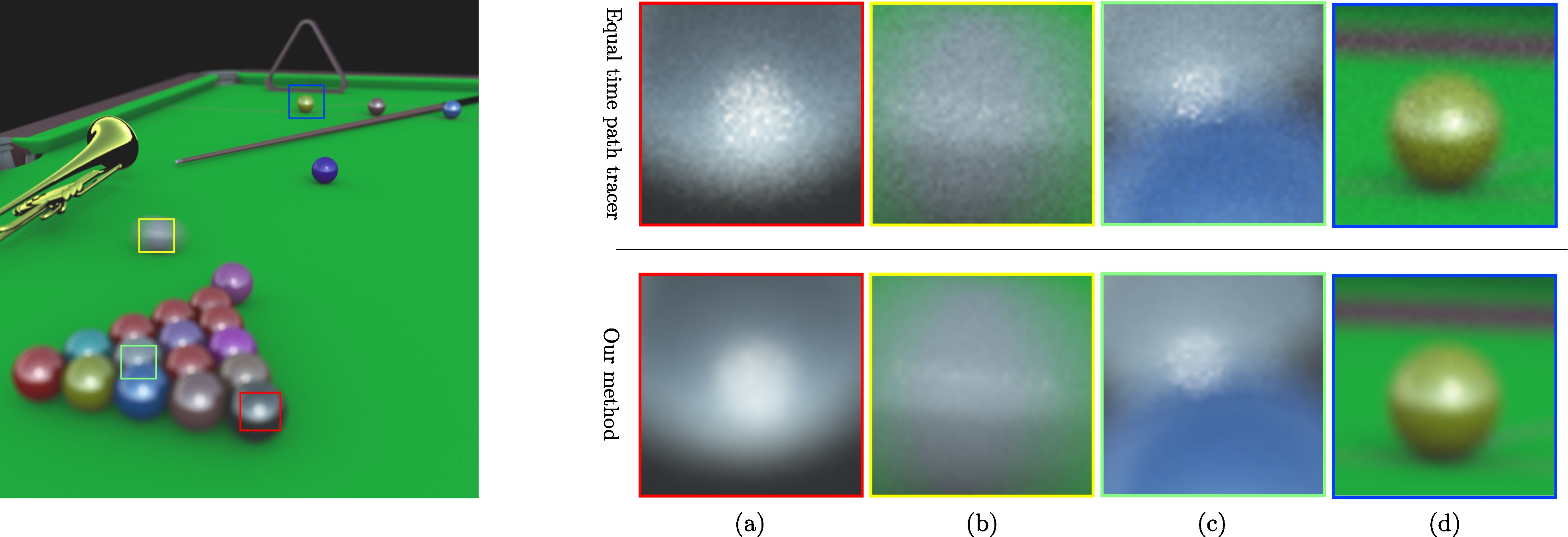

The rendering of effects such as motion blur and depth-of-field requires costly 5D integrals. We dramatically accelerate their computation through adaptive sampling and reconstruction based on the prediction of the anisotropy and bandwidth of the integrand. To this end, we develop a new frequency analysis of the 5D temporal light field, and show that first-order motion can be handled through simple changes of coordinates in 5D. We further introduce a compact representation of the spectrum using the covariance matrix and Gaussian approximations. We derive update equations for the 5×5 covariance matrices for each atomic light transport event, such as transport, occlusion, BRDF, texture, lens, and motion. The focus on atomic operations makes our work general and removes the need for special-case formulas. We present a new rendering algorithm that computes 5D covariance matrices on the image plane by tracing paths through the scene, focusing on the single-bounce case. This allows us to reduce sampling rates when appropriate and to reconstruct images with complex depth-of-field and motion blur effects.
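As an illustration of how a linear light transport event can update a 5×5 spectral covariance matrix, here is a minimal sketch. It assumes the coordinate order (x, y, theta, phi, t) and assumes each event is expressed as a matrix M acting on frequencies (ω' = M ω), so the covariance becomes M Σ M^T. For a primal change of coordinates x' = A x, the Fourier spectrum maps by M = A^{-T}. The helper names (`apply_linear_operator`, `transport_shear`) are hypothetical, and the transport operator is the textbook free-space shear, not a transcription of the paper's Table II matrices.

```python
from typing import List

Matrix = List[List[float]]

# Assumed coordinate order: space (x, y), angle (theta, phi), time (t).
X, Y, THETA, PHI, T = range(5)


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def identity(n: int) -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def apply_linear_operator(cov: Matrix, m: Matrix) -> Matrix:
    """Covariance of the spectrum after frequencies are mapped by w' = M w: M cov M^T."""
    return matmul(matmul(m, cov), transpose(m))


def transport_shear(d: float) -> Matrix:
    """Hypothetical frequency-space operator for free-space travel over distance d.

    Primal map: x' = x + d*theta, y' = y + d*phi. Its inverse transpose leaves
    spatial frequencies unchanged and adds -d times the spatial frequency to
    the matching angular frequency (a shear of the spectrum).
    """
    m = identity(5)
    m[THETA][X] = -d
    m[PHI][Y] = -d
    return m


# Usage: an isotropic unit spectrum after travelling a distance of 2.
sigma_after = apply_linear_operator(identity(5), transport_shear(2.0))
print(sigma_after[THETA][THETA], sigma_after[THETA][X])
```

Running this prints `5.0 -2.0`: the angular variance grows to 1 + d² and a space-angle correlation of -d appears, while the temporal entry is unchanged. Under these assumptions, any other linear event (for example a shear representing first-order motion) would be applied with the same `apply_linear_operator` call.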

Downloads: .pdf, .bib, .pptx (note: presentation to come)

Errata: the lens matrix (Table II (g)) is incorrect. Its diagonal should be [1-d/f, 1-d/f, 1, 1, 1]. The remaining (off-diagonal) coefficients are correct. Thanks to Jacob Munkberg for pointing out the inconsistency.
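For readers who have already implemented the lens matrix from Table II (g), the correction can be applied by overwriting only the diagonal, as in the sketch below. It assumes the matrix is stored as a 5×5 list of rows and that d and f are the quantities defined in the paper. The function names `corrected_lens_diagonal` and `fix_lens_diagonal` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def corrected_lens_diagonal(d: float, f: float) -> List[float]:
    """Diagonal of the lens matrix (Table II (g)) as stated in the errata."""
    return [1.0 - d / f, 1.0 - d / f, 1.0, 1.0, 1.0]


def fix_lens_diagonal(lens: Matrix, d: float, f: float) -> Matrix:
    """Return a copy of a 5x5 lens matrix with the errata's diagonal.

    Off-diagonal coefficients are copied unchanged, since the errata states
    they are correct; the input matrix is not modified.
    """
    if len(lens) != 5 or any(len(row) != 5 for row in lens):
        raise ValueError("expected a 5x5 matrix")
    fixed = [list(row) for row in lens]
    for i, value in enumerate(corrected_lens_diagonal(d, f)):
        fixed[i][i] = value
    return fixed
```

Copying the matrix rather than editing it in place keeps the original values available for comparison while checking an existing implementation.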